A Digital Marketing Agency That Drives Your Business Forward

Is Your Digital Marketing Stuck In The Slow Lane? We'll Shift It Into High Gear!

Our innovative services create unmatched growth opportunities for companies across Perth, Australia, and globally. Whatever your online goal, we’ll help you achieve it with the tenacity of a dateless cheerleader a week before prom night.

More Traffic – We’ll flood your site with buyer-ready visitors stampeding faster than the Running of the Bulls!

More Sales – Our digital marketing attracts more leads than moths swarming a bright light. They won’t be able to resist!

More Revenue – Boost revenue growth faster than a rocket blasting off for orbit! Our marketing brings the money bags.

Digital Marketing Services That Work

It's Survival Of The Fittest Out There. But We're Your Secret Weapon.

We live and breathe cutting-edge digital fuel injection delivering real nitrous power. What does that really mean? We deliver the digital marketing services your business needs to outwit, outplay, and outlast your competitors, all the way to the bank.

SEO

From SEO audits to site migrations, we’ve got the SEO skills to rank you on top AND get you high-quality leads (hello revenue) with our specialist SEO arm, Perth SEO Studio.

Google Ads

Tap into Google’s marketing mojo to reach customers on the search engine they trust, with high-performing campaigns that attract and convert.

Facebook Ads

The incredible targeting capabilities of Facebook Ads have turned this social ecosystem into a marketer’s dream, and now we use it to help turn business owners’ dreams into reality.

Remarketing

Keep your brand top of mind across devices and platforms with our remarketing mastery. Your competitors, who? You’ll be the only company your future customers think of when the time is right.

Content Marketing

Create value through content marketing and you’ll receive it back in spades. Forget orange: content marketing is the new black, and it’s here to stay.

Just The Beginning

Whatever you need, we can do it. Social media marketing? On it. A streamlined marketing-to-sales funnel? Too easy. Email automation? Done. A pretty website that is also pretty profitable? That’s why we’ve created Perth Website Studio! A multi-faceted campaign that adapts to the changing needs of the market, customers, and your business? You don’t even have to ask. We’re here for you.

A Marketing Agency That Puts Your Profits First

The Time For Overwhelm, Poor Results & Inefficient Marketing Is Over.

More Than Just A Perth Digital Marketing Agency

The Fairy Godmother Making Your Business Dreams Come True

Want to grow your business but your sales and marketing activities are falling flat? No time to do those much-needed marketing activities on top of everything else? Been burnt by marketing cowboys in the past and now wonder if marketing even works?

Whether you want to build an empire and achieve greatness, or just be “wealthy and free”, we offer an all-encompassing spectrum of digital marketing solutions tailored to your individual business goals.

Our results? They’re not just smoke and mirrors – they’re the real deal!

Oh, and that revenue? We’re all about increasing your average sale value, generating repeat customers, and making word-of-mouth referrals as viral as the latest dance trend on TikTok!

We’ve seen it all, from SEO nightmares to Google Ads gone rogue. We’re the problem-solvers who can turn your digital woes into success stories!

But we don’t just talk the talk, we walk the walk like a Victoria’s Secret model – just check out some of our digital marketing FAQs to see that we know what we’re talking about!

So if you’re looking for the best-of-the-best digital marketing services, then you’re in the right place. From digital marketing audits to analyse current performance and identify opportunities, through to defining the perfect digital marketing strategy to drive the best ROI, and everything in between, the results from our personalised digital marketing consulting are unrivalled.

It’s time to add a little magic to your business – chat to us about partnering with our Perth digital marketing specialists today.

Your Success Story Is This Way

Looking For Perth's Best Strategic Agency?

The Real Magic Trick? We've Been Where You Are.

We understand your pain points. We’ve been in your shoes, and we know how crucial it is to find a partner that really gets it: one that doesn’t just want another income stream, but is as invested in your business success as you are.

What else makes our clients stay happily with us, year after year?

- We live and breathe digital marketing strategies, from dawn till dusk.

- Our team is a league of literal digital superheroes (to our clients anyway), ready to save your day.

- We keep it local, right here in our Subiaco office, in the heart of Perth.

- We’re all about keeping you in the loop – no more radio silence.

- No long-term contracts – we rely on our results to keep you coming back.

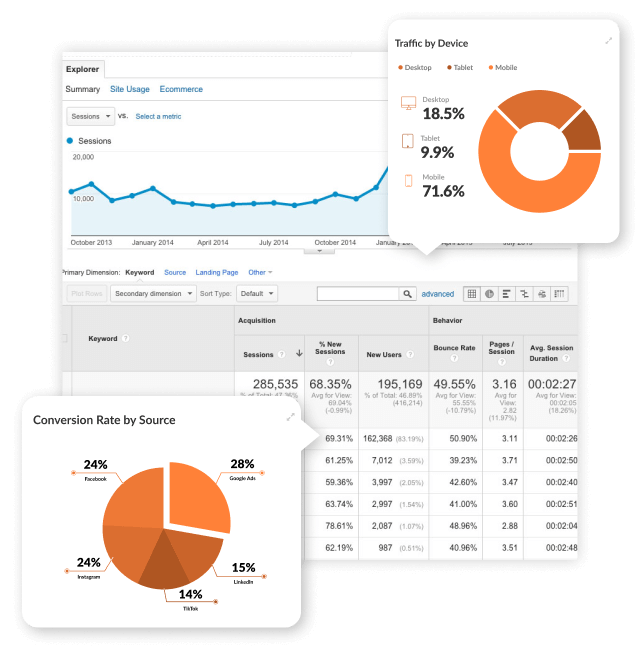

- Data-driven decisions are our bread and butter – no guesswork here.

- Your success makes us do happy little jigs in the office. We care, and we’ll prove it.

Award Companies Love Us More Than Coke (Cola)

Ok yeah, we’re that agency. Winners of Best Marketing Agency in Australia by SEMrush, and Best Small Integrated Search Agency in the Asia Pacific by the APAC Search Awards (a few times now…), plus so much more.

While our clients love the results they get, so do award companies! We’ve won awards for best website design, best B2B campaign, best social media campaign, best… you name it, we’ve won an award for it!

But we’re not just here to pat ourselves on the back; we’re here to catapult your business into the digital stratosphere! So let’s go!

The Digital Marketing Agency Perth Trusts

Trusted By These Companies (To Name A Few)

Happy Companies That Love Our Digital Marketing

We Get Results, And These Guys Love Us For It

Matt Keogh, Director

There are no magic bullets in Digital Marketing and after many years working through Digital Marketing companies and really struggling to understand what our marketing manager was actually doing on a monthly basis for us, I was relieved to touch base with Living Online.

They are completely transparent with the work done on a monthly basis, every member of their staff is responsive and every interaction we have had has been professional and results-driven. If you would like to be involved and understand your marketing spend, and be able to relate the work done to results, these guys are the company for you.

Gino’s Panel & Paint prides itself on being an industry leader, keeping up with the latest trends and setting the standard for modern automotive repair centres. In updating our digital profile, it was important to find the right partner to understand and promote Gino’s and what we stand for. Living Online has met the challenge in the most impactful way. Their professional and very proactive approach has dramatically lifted our online profile via SEO, social media and a superior interactive web design. From showcasing our services, suggesting new ideas to promote our business to sourcing industry news to keep our clients engaged and updated, Living Online has highlighted key features of our business which make us stand out from the rest as experts in our field.

Evan and the Living Online team are outstanding and should be considered a premium digital marketing agency. Working with Evan and his exceptional consulting team was a pleasure and their technical expertise far surpasses other digital marketing agencies. Living Online aided us with a Google Ads audit and executed a thorough SEO Migration. They also helped us with our Facebook Remarketing and conducted an extensive SEO Site Architecture review. If you are looking for experts to help you with your online marketing needs, then contact Living Online!

Trent Fleskens

No project or challenge is too hard. Engaging Living Online has kept my business on the forefront of modern marketing strategies, and our performance has reflected. Whether it’s paid advertising or sending our forgotten website presence straight to #1 organically within a year of engagement, I can honestly say I never believed Living Online could achieve what they have for my business. They proved me wrong again and again.

Harmony Sanderson, Marketing Manager

Living Online will be the first, and ONLY, partner I will recommend should the opportunity arise. Still extremely happy with the services your team has provided to date – it’s been such an invaluable partnership to us, so thank you again!

You instilled such a level of confidence in us from day dot, with your thorough planning, obvious technical expertise and understanding of what needed to be done. Having a partner who just quietly worked away in the background whilst we focused on business as usual was exactly what we needed and hoped for.